Nancy S. Jecker, University of Washington and Andrew Ko, University of Washington

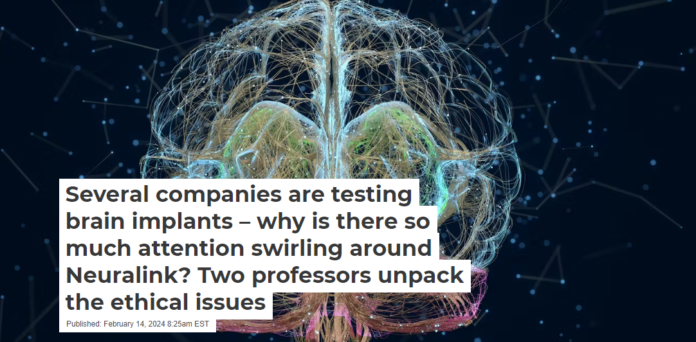

Putting a computer inside someone’s brain used to feel like the edge of science fiction. Today, it’s a reality. Academic and commercial groups are testing “brain-computer interface” devices to enable people with disabilities to function more independently. Yet Elon Musk’s company, Neuralink, has put this technology front and center in debates about safety, ethics and neuroscience.

In January 2024, Musk announced that Neuralink had implanted its first chip in a human subject’s brain. The Conversation reached out to two scholars at the University of Washington School of Medicine – Nancy Jecker, a bioethicist, and Andrew Ko, a neurosurgeon who implants brain chip devices – for their thoughts on the ethics of this new horizon in neuroscience.

How does a brain chip work?

Neuralink’s coin-size device, called N1, is designed to enable patients to carry out actions just by concentrating on them, without moving their bodies.

Subjects in the company’s PRIME study – short for Precise Robotically Implanted Brain-Computer Interface – undergo surgery to place the device in a part of the brain that controls movement. The chip records and processes the brain’s electrical activity, then transmits this data to an external device, such as a phone or computer.

The external device “decodes” the patient’s brain activity, learning to associate certain patterns with the patient’s goal: moving a computer cursor up a screen, for example. Over time, the software can recognize a pattern of neural firing that consistently occurs while the participant is imagining that task, and then execute the task for the person.
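To make the idea of "decoding" concrete, here is a minimal sketch in Python. It is not Neuralink's software, and nothing in it comes from the company: the four-channel firing rates, the `TEMPLATES` values, the noise level and the nearest-centroid method are all assumptions chosen for illustration. The sketch averages the simulated activity recorded while a person imagines each cursor direction, then labels a new recording with whichever average it most resembles.

```python
import random

# Hypothetical average firing rates (spikes per second) on four recording
# channels while a person imagines each cursor direction. Invented numbers.
TEMPLATES = {
    "up": [20.0, 5.0, 5.0, 5.0],
    "down": [5.0, 20.0, 5.0, 5.0],
    "left": [5.0, 5.0, 20.0, 5.0],
    "right": [5.0, 5.0, 5.0, 20.0],
}

rng = random.Random(0)  # fixed seed so the simulation is repeatable


def simulate_trial(direction):
    """Return one noisy recording for an imagined direction."""
    return [rate + rng.gauss(0, 2.0) for rate in TEMPLATES[direction]]


def train_centroids(trials):
    """Average the recordings for each label: the 'learning' step."""
    grouped = {}
    for rates, label in trials:
        grouped.setdefault(label, []).append(rates)
    return {
        label: [sum(channel) / len(rows) for channel in zip(*rows)]
        for label, rows in grouped.items()
    }


def decode(centroids, rates):
    """Pick the label whose average pattern is closest to this recording."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], rates))


# Calibration: 20 simulated trials per imagined direction.
training = [(simulate_trial(d), d) for d in TEMPLATES for _ in range(20)]
centroids = train_centroids(training)

# A new recording is translated into a cursor command.
command = decode(centroids, simulate_trial("left"))
```

Real decoders work with far more channels and far noisier signals, and typically rely on more sophisticated statistical models, but the calibrate-then-classify loop is the same basic pattern the paragraph above describes.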

Neuralink’s current trial is focused on helping people with paralyzed limbs control computers or smartphones. Brain-computer interfaces, commonly called BCIs, can also be used to control devices such as wheelchairs.

A few companies are testing BCIs. What’s different about Neuralink?

Noninvasive devices positioned on the outside of a person’s head have been used in clinical trials for a long time, but they have not received approval from the Food and Drug Administration for commercial development.

There are other brain-computer devices, like Neuralink’s, that are fully implanted and wireless. However, the N1 implant combines more technologies in a single device: It can target individual neurons, record from thousands of sites in the brain and recharge its small battery wirelessly. These are important advances that could produce better outcomes.

Why is Neuralink drawing criticism?

Neuralink received FDA approval for human trials in May 2023. Musk announced the company’s first human trial on his social media platform, X – formerly Twitter – in January 2024.

Information about the implant, however, is scarce, aside from a brochure aimed at recruiting trial subjects. Neuralink did not register the trial at ClinicalTrials.gov, as is customary and as some academic journals require.

Some scientists are troubled by this lack of transparency. Sharing information about clinical trials is important because it helps other investigators learn about areas related to their research and can improve patient care. Academic journals can also be biased toward positive results, preventing researchers from learning from unsuccessful experiments.

Fellows at the Hastings Center, a bioethics think tank, have warned that Musk’s brand of “science by press release, while increasingly common, is not science.” They advise against relying on someone with a huge financial stake in a research outcome to function as the sole source of information.

When scientific research is funded by government agencies or philanthropic groups, its aim is to promote the public good. Neuralink, on the other hand, embodies a private equity model, which is becoming more common in science. Firms pooling funds from private investors to back science breakthroughs may strive to do good, but they also strive to maximize profits, which can conflict with patients’ best interests.

In 2022, the U.S. Department of Agriculture investigated animal cruelty at Neuralink, according to a Reuters report, after employees accused the company of rushing tests and botching procedures on test animals in a race for results. The agency’s inspection found no breaches, according to a letter from the USDA secretary to lawmakers, which Reuters reviewed. However, the secretary did note an “adverse surgical event” in 2019 that Neuralink had self-reported.

In a separate incident also reported by Reuters, the Department of Transportation fined Neuralink for violating rules about transporting hazardous materials, including a flammable liquid.

What other ethical issues does Neuralink’s trial raise?

When brain-computer interfaces are used to help patients who suffer from disabling conditions function more independently, such as by helping them communicate or move about, this can profoundly improve their quality of life. In particular, it helps people recover a sense of their own agency or autonomy – one of the key tenets of medical ethics.

However well-intentioned, medical interventions can produce unintended consequences. With BCIs, scientists and ethicists are particularly concerned about the potential for identity theft, password hacking and blackmail. Given how the devices access users’ thoughts, there is also the possibility that their autonomy could be manipulated by third parties.

The ethics of medicine requires physicians to help patients, while minimizing potential harm. In addition to errors and privacy risks, scientists worry about potential adverse effects of a completely implanted device like Neuralink, since device components are not easily replaced after implantation.

When considering any invasive medical intervention, patients, providers and developers seek a balance between risk and benefit. At current levels of safety and reliability, the benefit of a permanent implant would have to be large to justify the uncertain risks.

What’s next?

For now, Neuralink’s trials are focused on patients with paralysis. Musk has said his ultimate goal for BCIs, however, is to help humanity – including healthy people – “keep pace” with artificial intelligence.

This raises questions about another core tenet of medical ethics: justice. Some types of supercharged brain-computer synthesis could exacerbate social inequalities if only wealthy citizens have access to enhancements.

A more immediate concern, however, is that the device could increasingly prove helpful for people with disabilities, yet become unavailable if research funding is lost. For patients whose access to a device is tied to a research study, the prospect of losing access after the study ends can be devastating. This raises thorny questions about whether it is ever ethical to provide early access to breakthrough medical interventions prior to their receiving full FDA approval.

Clear ethical and legal guidelines are needed to ensure the benefits that stem from scientific innovations like Neuralink’s brain chip are balanced against patient safety and societal good.

Nancy S. Jecker, Professor of Bioethics and Humanities, School of Medicine, University of Washington and Andrew Ko, Assistant Professor of Neurological Surgery, School of Medicine, University of Washington

This article is republished from The Conversation under a Creative Commons license. Read the original article.